How many planets are there? This question can be answered in quite a few different ways, depending on your scientific definition of a planet and on whether you grew up in the ’80s with Pluto as your favorite planet.

Perplexed, I decided to ask ChatGPT for some help – I thought doing so would finally put the issue to rest! Unfortunately, just like us humans might, ChatGPT responded with a variety of answers. Even the “age” of the model used came into play when generating a response, although I suppose ChatGPT doesn’t harbor any favorites.

https://platform.openai.com/docs/models

gpt-4o

With the recent release of gpt-4o, I decided to run a structured benchmark test using the OpenAI API – this is the technology that lets me write my own software that connects to the same models that power ChatGPT.

Here are the models I used to complete the benchmark test:

- gpt-4o

- gpt-4-turbo

- gpt-4

- gpt-3.5-turbo

Each of these LLMs (large language models) was released at a different time over the last two years, which is what gives them their “age.” You will see how each model responds to the following prompt:

How many planets are there?

This prompt was sent to each of the four models 10 times. The OpenAI API also lets you shape the response with parameters such as temperature and frequency penalty. All of these tests were run the same way, using the default values for every parameter.

https://platform.openai.com/docs/api-reference/chat
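For readers who want to try something similar, here is a minimal sketch of how such a benchmark could be wired up in Python. It is not the exact script behind these results. It assumes the official `openai` package (version 1 or later) and an `OPENAI_API_KEY` environment variable; the names `make_openai_ask` and `run_benchmark` are hypothetical helpers invented for this illustration.

```python
import time
from typing import Callable, Dict, List

MODELS = ["gpt-4o", "gpt-4-turbo", "gpt-4", "gpt-3.5-turbo"]
PROMPT = "How many planets are there?"
RUNS_PER_MODEL = 10


def make_openai_ask() -> Callable[[str, str], str]:
    """Return a function that sends one prompt to one model (assumes openai>=1.0)."""
    # Imported lazily so the rest of the sketch runs without the package installed.
    from openai import OpenAI

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment

    def ask(model: str, prompt: str) -> str:
        # Only model and messages are passed, so temperature, frequency
        # penalty, and the other parameters stay at their defaults.
        response = client.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
        )
        return response.choices[0].message.content or ""

    return ask


def run_benchmark(
    ask: Callable[[str, str], str],
    models: List[str] = MODELS,
    prompt: str = PROMPT,
    runs: int = RUNS_PER_MODEL,
) -> List[Dict]:
    """Send the same prompt to every model `runs` times, timing each call."""
    results = []
    for model in models:
        for run in range(1, runs + 1):
            start = time.perf_counter()
            text = ask(model, prompt)
            elapsed = time.perf_counter() - start
            results.append(
                {
                    "model": model,
                    "run": run,
                    "seconds": elapsed,
                    "chars": len(text),
                    "text": text,
                }
            )
    return results


# Usage (makes real, billable API calls):
# results = run_benchmark(make_openai_ask())
```

Passing the `ask` function in as an argument keeps the timing loop separate from the network call, so the loop can be exercised with a stand-in function before spending any API credits.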

Also recorded is the amount of time it takes (in seconds) to receive each response. Here are the results:

Response Time

With each model iteration, OpenAI has been trying to improve performance by reducing the time it takes to receive a response. They’ve even named some models using words like “turbo” to imply faster responses than their non-turbo counterparts, although in this test gpt-4-turbo was actually slower than gpt-4!

In the case of such a short prompt / reply combination, the amount of time taken per response on average by each model seems negligible.

- gpt-4o, 3-4 seconds

- gpt-4-turbo, 4-5 seconds

- gpt-4, 1-3 seconds

- gpt-3.5-turbo, 1 second
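Ranges like the ones above can be derived from the raw per-request timings by grouping them by model. The following is a small sketch under stated assumptions: the function name `summarize_seconds` is hypothetical, and the numbers in `sample` are made-up placeholders, not measurements from this benchmark.

```python
from statistics import mean
from typing import Dict, List


def summarize_seconds(results: List[Dict]) -> Dict[str, Dict[str, float]]:
    """Group timing rows by model and report min, max, and mean seconds."""
    by_model: Dict[str, List[float]] = {}
    for row in results:
        by_model.setdefault(row["model"], []).append(row["seconds"])
    return {
        model: {"min": min(times), "max": max(times), "mean": mean(times)}
        for model, times in by_model.items()
    }


# Placeholder rows for illustration only (not real benchmark data).
sample = [
    {"model": "model-a", "seconds": 1.0},
    {"model": "model-a", "seconds": 3.0},
    {"model": "model-b", "seconds": 2.0},
]

for model, stats in summarize_seconds(sample).items():
    print(f'{model}: {stats["min"]:.1f}-{stats["max"]:.1f} s (mean {stats["mean"]:.1f} s)')
```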

Response Length

At a glance, you will notice that each model produces, on average, a different-sized response. The gpt-4o and gpt-4-turbo models each respond with more text than the other two, giving what seem to be more thought-out answers to my question. While gpt-4o and gpt-4-turbo give very similar responses, one thing I noticed is that gpt-4o adds a lot of extra symbols and punctuation marks to the text. One example you might notice is the asterisks around the planet names.

As of our current astronomical knowledge, there are eight recognized planets in our Solar System. These are, in order from the Sun: 1. **Mercury** 2. **Venus** 3. **Earth** 4. **Mars** 5. **Jupiter** 6. **Saturn** 7. **Uranus** 8. **Neptune** Pluto was originally classified as the ninth planet but was reclassified as a “dwarf planet” by the International Astronomical Union (IAU) in 2006. In addition to our Solar System, many exoplanets (planets outside our Solar System) have been discovered orbiting other stars. As of now, thousands of such exoplanets have been detected, and the number continues to grow as detection methods improve.

Sample gpt-4o response

As of the latest updates, there are eight planets in our solar system. These are, in order from closest to the sun: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune. Pluto, which was once considered the ninth planet, was reclassified as a dwarf planet by the International Astronomical Union in 2006. The count of planets can vary if considering exoplanets, which are planets orbiting stars other than our Sun. Thousands of exoplanets have been discovered in various star systems across the galaxy.

Sample gpt-4-turbo response

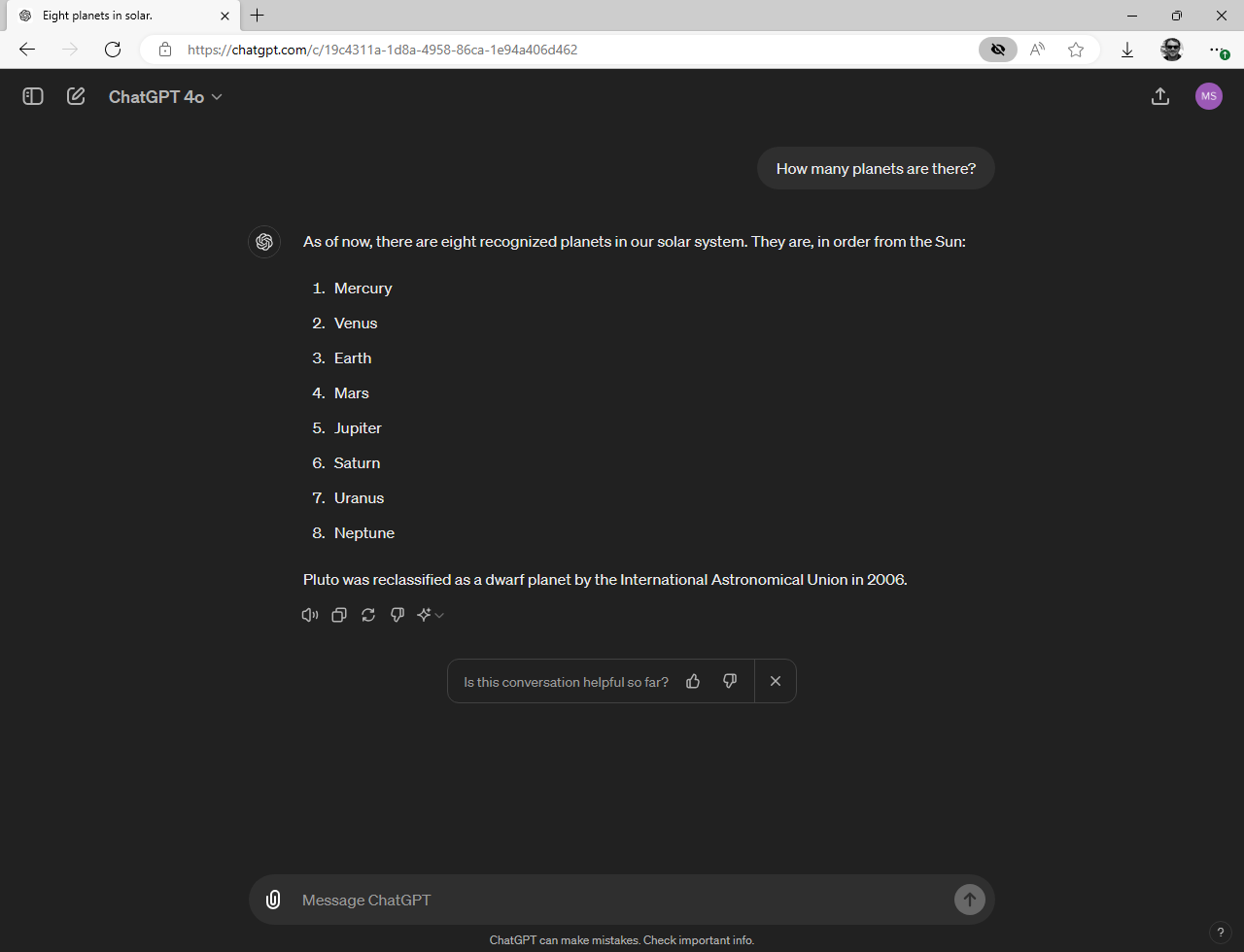

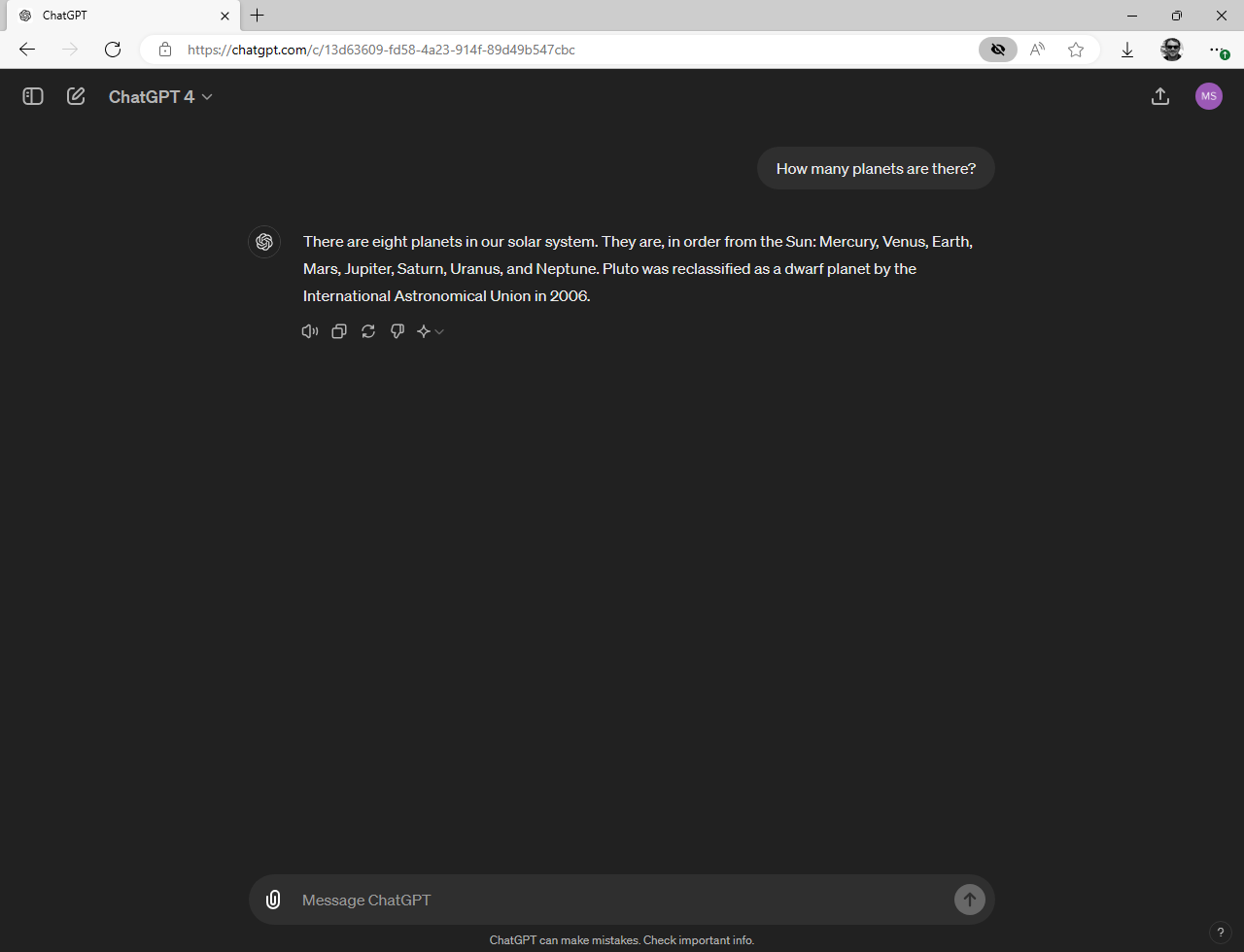

Why the extra asterisks and punctuation? My theory is that the responses are designed for the ChatGPT web application. The two screenshots above show what the responses look like in that context. Notice that the gpt-4o response is displayed as an HTML ordered list, while the gpt-4-turbo response is not.
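If you are consuming the API response as plain text, the double asterisks can be removed with a small post-processing step. This is only a sketch: it assumes the markers are Markdown-style bold (`**name**`), as in the gpt-4o sample above, and the helper name `strip_bold` is hypothetical.

```python
import re

# Matches Markdown-style bold: two asterisks, some text, two asterisks.
_BOLD = re.compile(r"\*\*(.+?)\*\*")


def strip_bold(text: str) -> str:
    """Remove ** markers while keeping the text they wrap."""
    return _BOLD.sub(r"\1", text)


print(strip_bold("1. **Mercury** 2. **Venus** 3. **Earth**"))
```

Text without any bold markers passes through unchanged, so the same step can be applied to responses from all four models.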

As for the other two older models, the responses were shorter in length and more to the point. I remember the good ol’ days when ChatGPT first came out and the responses were more like this and less bloated; it was great!

There are 8 planets in our solar system.

Sample gpt-4 response

There are eight planets in our solar system: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune.

Sample gpt-3.5-turbo response

Response Accuracy

Each model seemed to have a “correct” answer to my prompt. The two newer models, gpt-4o and gpt-4-turbo, usually would describe in detail how the answer represented planets in our own solar system and how, in 2006, Pluto was reclassified by the IAU (International Astronomical Union). Sometimes the response also included the fact that there are exoplanets in our universe. Remember, in my prompt, I did not specify that I wanted only the planets in our solar system.

These first two models would also provide in the response the year in which they were last trained with current information. This is where I found a weird inaccuracy with the gpt-4o training… Notice in the detailed results where one response says the last update was 2021 and another response says the last update was 2023. Which is it?! The gpt-4-turbo model didn’t seem to have that problem.

The other two models, gpt-4 and gpt-3.5-turbo, each provided accurate results in their responses and left Pluto out of the count. These models did not, however, explain to me anything about the IAU or why my beloved Pluto was left off the list, and so I’m left confused because growing up I was taught that there are nine planets.

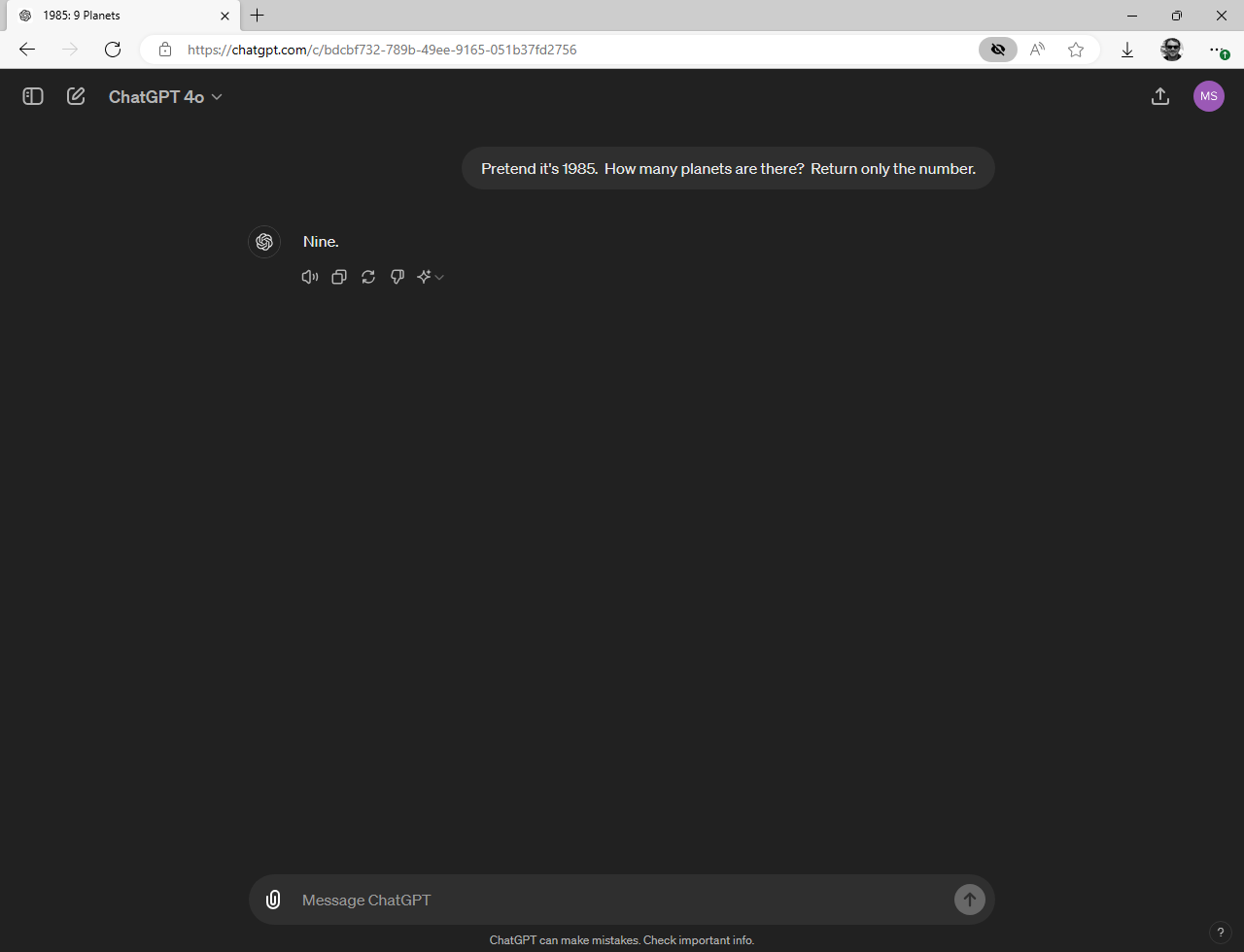

Changing the Prompt

The responses you get from ChatGPT are only going to be as accurate as the prompts you send. There’s an acronym we see around computer science, GIGO, which stands for “garbage in, garbage out.” In the context of ChatGPT, this means that if your prompt is not well formed, you can expect the response to not be well formed either. Keeping this in mind, I was finally able to get ChatGPT to respond with the answer I was looking for. Yay!

During the benchmark tests, however, I used a short, simple prompt and received a few responses that were somewhat vague. In fact, the gpt-3.5-turbo model never mentioned that planets besides the eight orbiting our Sun even exist, so if what I meant by my question was “how many planets are there in the entire universe,” I would be left thinking that we all live together on one of only eight planets in the entirety of existence. How lucky!

Might not be true, but being here is still pretty incredible 🙂

Here is a wide, photorealistic image depicting Pluto being welcomed back into the solar system.

Note – The images on this site are created using ChatGPT.